Published on January 07, 2026

If AI cannot read your website, your brand effectively does not exist — no matter how high you rank on Google.

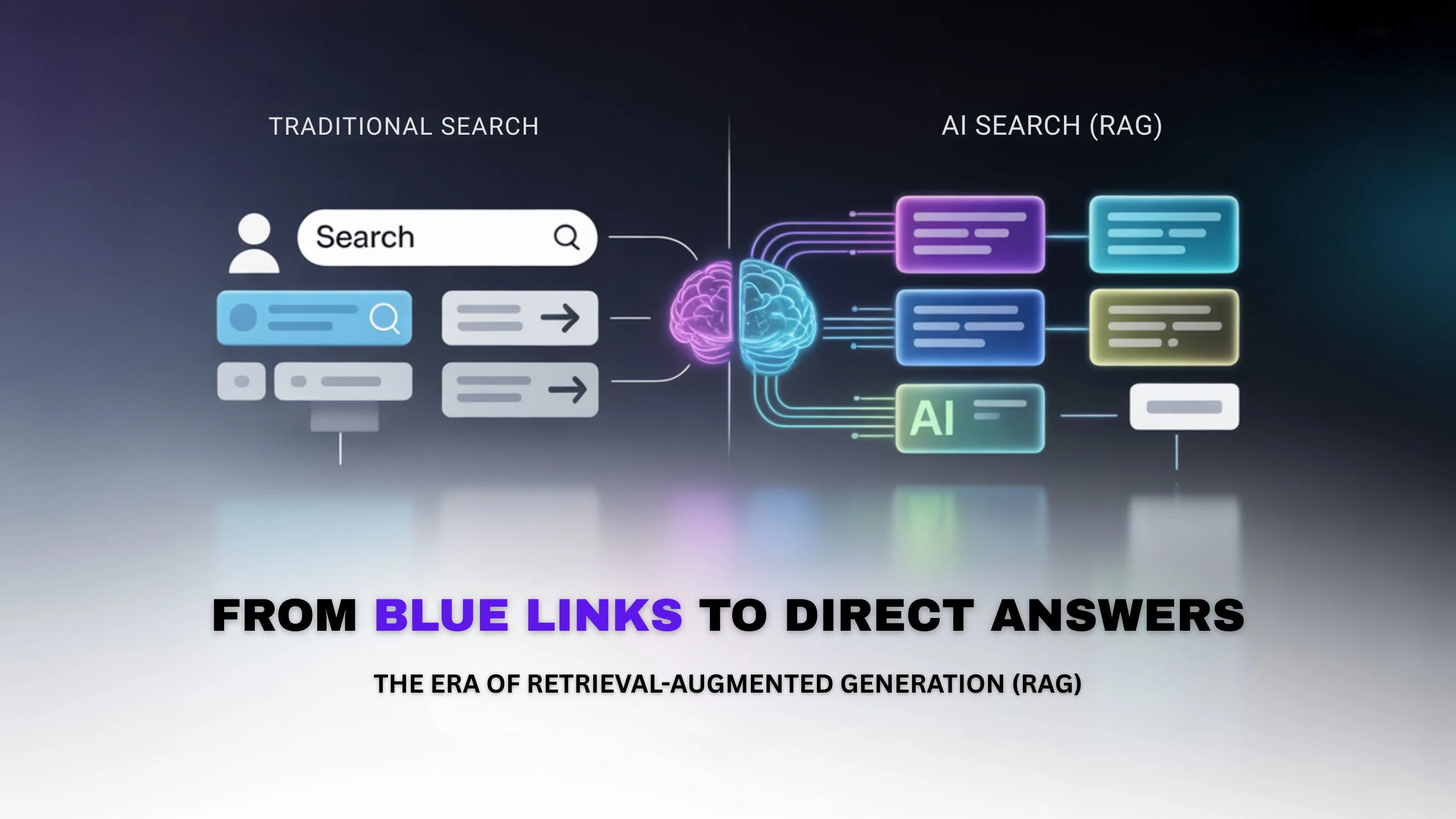

Search has shifted from blue links to direct answers. This article explains exactly how modern AI systems decide which websites deserve visibility — and which are ignored.

From Blue Links to Direct Answers: The Era of Retrieval-Augmented Generation (RAG)

For over two decades, search engines operated on a simple, unspoken promise: Type a query → Get ten blue links → Click and read.

That era is effectively ending.

Modern AI systems have evolved beyond acting as mere directories of web pages. They are no longer just search engines; they are Answer Engines. Instead of offering you a list of potential solutions and asking you to decide, they retrieve relevant information, synthesize it, and generate a direct, human-like response.

This architecture is known as Retrieval-Augmented Generation (RAG), and it represents a fundamental shift in how the internet works.

- Old Search: “Here are some pages. You decide which one is best.”

- New AI Search: “Here is the answer. I’ve already decided for you.”

For businesses and content creators, this shift changes everything—from visibility and SEO to how authority is built.

The “User” vs. The “Crawler”: A Critical Distinction

To understand how AI tools see your website today, you must distinguish between two very different data paths. Many misconceptions about AI SEO stem from confusing these two concepts:

1. Training Data (The Past – Static)

This is the information collected during the initial model training. It has a specific cutoff date and is used to teach the AI language patterns and reasoning. Crucially, your recent content is NOT here. This data is static and does not determine whether your site appears in an answer to a breaking news query today.

2. Live Retrieval (The Present – Dynamic)

This happens at the exact moment a query is made. The AI uses search indexes and live crawlers to pull real-time web content, deciding which sources to trust and cite immediately.

This article focuses on Live Retrieval. Why? Because this is the mechanism AI tools use to access your site right now to answer user questions.

Why This Shift Matters for Your Business

In the world of classic SEO, the equation was simple:

Ranking = Traffic = Opportunity

In the emerging world of AI Search, the stakes are different:

Inclusion = Existence

Exclusion = Invisibility

If your website is not crawlable by AI bots, not structured for easy extraction, or not written in answer-friendly formats, AI may skip you entirely. You might still rank #1 on a traditional Google search result page, but in the AI answer box, you could be completely invisible.

The Landscape: How Major AI Tools Retrieve Data

Not all AI engines function the same way. Understanding their retrieval methods is key to optimization:

- ChatGPT: Relies on Bing Search combined with its own crawler (OAI-SearchBot). Success here requires solid Bing SEO fundamentals and an AI-readable site structure.

- Gemini: Deeply integrated with Google’s core search index. To win here, you need strong traditional Google SEO and high "Helpful Content" signals.

- Perplexity: Operates as a Hybrid Answer Engine, utilizing Google, Bing, and custom crawling. It prioritizes citation-worthy content and multi-engine visibility.

The Strategic Takeaway

The game has changed. You are no longer optimizing solely for rankings; you are optimizing for being chosen as a source.

The future of visibility isn’t asking, "Can I rank for this keyword?" It is asking, "Can AI confidently use me to answer this question?"

That single shift in perspective must dictate your content structure, technical SEO, and authority-building strategies moving forward.

Deep Dive: Inside the Three Major AI Engines

To optimize effectively, we must stop treating "AI Search" as a single entity. The three market leaders—ChatGPT, Gemini, and Perplexity—operate on fundamentally different architectures. Understanding these differences allows you to tailor your content strategy for maximum visibility on each platform.

II. ChatGPT: The Federated Searcher

ChatGPT does not crawl the web independently in the same way Google does. Instead, it operates using a two-layer "Federated Search" approach:

- Discovery Layer: It uses Bing’s index to identify relevant URLs for a query.

- Reading Layer: It visits those specific selected pages to extract answers.

Think of this process as “Find first, then read selectively.”
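The "find first, then read selectively" flow can be illustrated with a toy sketch. Everything here is a stand-in: `INDEX` plays the role of a search index, `PAGES` plays the role of the live web, and the keyword-overlap scoring is an assumption made for illustration, not how ChatGPT or Bing actually rank or extract content.

```python
# Toy two-layer retrieval: discover URLs from an index, then read only those pages.
# INDEX, PAGES, and the scoring are hypothetical stand-ins for illustration.

INDEX = {
    "https://example.com/geo": "What is generative engine optimization",
    "https://example.com/pricing": "Pricing plans and billing",
    "https://example.com/seo": "Classic SEO ranking tips",
}

PAGES = {
    "https://example.com/geo": (
        "Welcome to our blog. Generative engine optimization structures "
        "content so AI systems can cite it. Contact us today."
    ),
    "https://example.com/pricing": "Plans start with a free tier.",
    "https://example.com/seo": "Ranking depends on many signals.",
}


def _terms(text):
    """Lowercased set of words with basic punctuation stripped."""
    return {w.strip(".,?!").lower() for w in text.split()}


def discover(query, index, k=2):
    """Discovery layer: rank indexed URLs by query-term overlap with their snippet."""
    q = _terms(query)
    scored = [(len(q & _terms(snippet)), url) for url, snippet in index.items()]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: (-s[0], s[1]))
    return [url for _, url in ranked[:k]]


def read(urls, pages, query):
    """Reading layer: visit only the selected URLs and keep the best-matching sentence."""
    q = _terms(query)
    passages = {}
    for url in urls:
        sentences = [s.strip() for s in pages.get(url, "").split(".") if s.strip()]
        best = max(sentences, key=lambda s: len(q & _terms(s)), default=None)
        if best:
            passages[url] = best
    return passages


query = "what is generative engine optimization"
selected = discover(query, INDEX)
passages = read(selected, PAGES, query)
```

Pages that never make it through the discovery step are never read at all, which is why index visibility comes before on-page structure.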

The Bot Ecosystem: Who Is Actually Visiting?

ChatGPT employs specific user agents for different tasks. Distinguishing between them is critical for your robots.txt strategy:

- OAI-SearchBot: Used for live search features. It obeys robots.txt and is the primary agent responsible for surfacing your content in current answers.

- ChatGPT-User: Triggered explicitly when a human user asks ChatGPT to "browse this link." It acts like a standard human browser.

- GPTBot: Used only for training future models. It is not used for live answers or citations.

Key Takeaway: Blocking GPTBot prevents your data from training future models, but it does not block ChatGPT from citing you in live answers today.
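As a minimal sketch of that robots.txt strategy, the snippet below defines a policy that opts out of training while staying open to live search, then checks it with Python's standard `urllib.robotparser`. The policy text and the `example.com` URL are illustrative assumptions, and the standard-library parser only approximates how each bot interprets the file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical policy: block the training crawler, allow the live-search crawler.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: OAI-SearchBot
Allow: /

User-agent: *
Allow: /
"""


def can_fetch(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


PAGE = "https://example.com/blog/geo-guide"  # placeholder URL
results = {
    agent: can_fetch(ROBOTS_TXT, agent, PAGE)
    for agent in ("GPTBot", "OAI-SearchBot", "ChatGPT-User")
}
```

With this policy, `results` reports GPTBot as blocked while OAI-SearchBot and ChatGPT-User remain allowed.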

How ChatGPT “Reads” Your Content

When ChatGPT visits your site, it uses headless browsing with limited resources (strict timeouts and memory caps). Its extraction process is ruthless:

- Ignores: Navigation menus, ads, popups, sidebars, and widgets.

- Focuses On: The page's main content container, headings, and paragraphs.
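To get a feel for what survives this kind of extraction, here is a rough sketch using Python's built-in `html.parser`. The tag lists in `SKIP_TAGS` and `KEEP_TAGS` are assumptions chosen for illustration; the actual extraction logic used by ChatGPT is not public.

```python
from html.parser import HTMLParser

# Assumed boilerplate containers to ignore and content tags to keep.
SKIP_TAGS = {"nav", "aside", "footer", "script", "style"}
KEEP_TAGS = {"h1", "h2", "h3", "p", "li"}


class MainTextExtractor(HTMLParser):
    """Collects text from headings and paragraphs outside boilerplate containers."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0   # >0 while inside nav/aside/footer/script/style
        self.keep_depth = 0   # >0 while inside a heading, paragraph, or list item
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag in KEEP_TAGS and self.skip_depth == 0:
            self.keep_depth += 1
            self.chunks.append("")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth > 0:
            self.skip_depth -= 1
        elif tag in KEEP_TAGS and self.keep_depth > 0 and self.skip_depth == 0:
            self.keep_depth -= 1

    def handle_data(self, data):
        if self.keep_depth > 0 and self.skip_depth == 0:
            self.chunks[-1] += data


def extract_text(html: str) -> list:
    """Return cleaned text chunks in document order."""
    parser = MainTextExtractor()
    parser.feed(html)
    cleaned = (" ".join(chunk.split()) for chunk in parser.chunks)
    return [c for c in cleaned if c]


SAMPLE = """
<html><body>
<nav><ul><li>Home</li><li>Pricing</li></ul></nav>
<main>
<h1>What Is GEO?</h1>
<p>GEO structures content so AI systems can <b>reuse</b> it.</p>
</main>
<aside><p>Subscribe to our newsletter</p></aside>
<footer><p>Copyright</p></footer>
</body></html>
"""

extracted = extract_text(SAMPLE)
```

Running your own templates through a filter like this is a quick way to see whether the key answer sits in the content area or only in the chrome around it.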

The "Above the Fold" Rule: ChatGPT prioritizes direct answers found early on the page, roughly within the first 1,000–2,000 tokens (about 750–1,500 words). If your key insight is buried in an accordion, hidden in a footer, or spread across multiple tabs, it is often skipped. Best practice: Place clear answers early, under explicit headings.

III. Google Gemini: The Index-Native AI

Gemini operates with a distinct advantage: it lives inside Google Search.

Unlike competitors that rely on third-party indexes or live crawling, Gemini pulls directly from Google’s pre-indexed, cached web. The rule is simple: If Google Search can see it, Gemini already knows it.

Crawlers & Control

- Googlebot: The same crawler used for traditional SEO powers Gemini’s retrieval.

- Google-Extended: A specific token for robots.txt that allows you to control whether your content is used for AI model training. Crucially, using this does not affect your traditional search rankings.

The Context Window Advantage

Gemini’s defining feature is its massive context window (supporting 1M+ tokens in versions like 1.5 Pro). While other AIs might "forget" content as they scroll down a page, Gemini can:

- Read entire long-form articles.

- Parse complex PDFs.

- Understand massive code blocks.

- Maintain context from the first sentence to the last.

Additionally, because it uses Google’s full rendering stack, if a page is indexed by Google (which you can confirm in Google Search Console), Gemini can generally render and understand its JavaScript content.

IV. Perplexity: The Answer Engine

Perplexity operates as a "Search Wrapper." It aggregates results from the Google and Bing indexes, then adds its own real-time processing layer to synthesize an answer. Its primary goal is not just to answer, but to provide verified answers with citations.

Crawler Behavior: Speed Over Visuals

PerplexityBot is optimized for speed. It often captures raw HTML snapshots and performs minimal JavaScript rendering. It prioritizes fast text extraction over visual accuracy.

Citation-First Ranking Logic

Perplexity's ranking algorithm rewards declarative, fact-based content. It looks for sentences that are easily quotable.

- The Logic: If a sentence can be "Copied + Cited" directly, it ranks higher.

- The Format: It strongly prefers clear, factual statements over opinionated commentary.

The Role of Structured Data

Because it acts as a wrapper, Perplexity relies heavily on structured data to parse information quickly. It looks for Schema markup such as:

- FAQPage

- HowTo

- Article

- Author (the author property of an Article, typically a Person)

This data helps Perplexity instantly distinguish between a verified fact and a random opinion.
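As an illustration of the Article and author markup mentioned above, the sketch below builds a Schema.org `Article` object in Python and wraps it in a JSON-LD script tag. The headline and author name are placeholders, and whether any given engine actually weights this markup is an assumption rather than something documented.

```python
import json


def build_article_jsonld(headline, author_name, date_published):
    """Build a minimal Schema.org Article object with a Person author."""
    return {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601 date
        "author": {"@type": "Person", "name": author_name},
    }


def as_script_tag(data):
    """Serialize the object as a JSON-LD script tag for the page's HTML."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Placeholder values for illustration only.
article = build_article_jsonld(
    headline="How AI Tools See Your Website",
    author_name="Jane Doe",
    date_published="2026-01-07",
)
snippet = as_script_tag(article)
```

The resulting `snippet` can be placed in the page's head or body so that it ships with the initial HTML.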

A Note on Access: Perplexity has faced criticism regarding its compliance with robots.txt and use of IP masking. While the company claims compliance, many publishers explicitly block PerplexityBot. This remains a key decision point for site owners balancing visibility against control.
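Before making that decision, it helps to see what your current robots.txt actually declares for each agent named in this article. The following sketch audits a robots.txt string with Python's standard `urllib.robotparser`; the sample file and URL are hypothetical. Note that Google-Extended is a control token rather than a separate crawler, so its row only shows what the file states, and none of this proves how a given bot behaves in practice.

```python
from urllib.robotparser import RobotFileParser

# Agents and tokens named in this article.
AI_AGENTS = [
    "GPTBot",
    "OAI-SearchBot",
    "ChatGPT-User",
    "Googlebot",
    "Google-Extended",
    "PerplexityBot",
]


def audit_robots(robots_txt, url, agents=AI_AGENTS):
    """Map each agent to True/False: may it fetch url under this robots.txt?"""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Hypothetical policy: opt out of Gemini training and Perplexity, keep search open.
SAMPLE_ROBOTS = """\
User-agent: Google-Extended
Disallow: /

User-agent: PerplexityBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

report = audit_robots(SAMPLE_ROBOTS, "https://example.com/article")
for agent, allowed in report.items():
    print(f"{agent:16} {'allowed' if allowed else 'blocked'}")
```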

Generative Engine Optimization (GEO): Practical Strategies That Actually Work

We have covered how the shift to AI search happened and who the major players are. Now, we turn to the most critical question: What do you actually do about it?

This is the playbook for Generative Engine Optimization (GEO)—the practice of structuring content so AI systems like ChatGPT, Gemini, and Perplexity can understand, trust, and reuse it in generated answers.

1. Optimize for Fact Density (The Hallucination Killer)

Why It Matters: Large Language Models (LLMs) function on probability. When they encounter vague claims, the risk of "hallucination" (inventing facts) increases. Precise, dense facts act as anchors, forcing the AI to stick to the truth—your truth.

How to Do It:

- Be Specific: Use exact numbers, dates, locations, and version numbers.

- Name Names: Explicitly mention proper nouns (specific tools, company names, industry standards).

- Kill Fluff: Avoid generic adjectives like "industry-leading" or "fast" unless backed by evidence.

The Comparison:

❌ Bad (Vague): "Our software is fast and reliable."

✅ Good (Fact-Dense): "Our software processes 10GB of data in 3.4 seconds, tested on AWS c6i.4xlarge instances (Jan 2025)."

Rule of Thumb: If a sentence can be questioned with "How much?", "When?", or "Compared to what?" — you need more data.
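That rule of thumb can be turned into a crude first-pass check. The sketch below flags sentences that use a vague adjective and contain no digits at all. The `VAGUE_WORDS` list starts from the adjectives named above and is otherwise an assumption; a heuristic like this will miss plenty and is no substitute for an editor.

```python
import re

# Adjectives named in this section; extend the list for your own content.
VAGUE_WORDS = {"fast", "reliable", "industry-leading"}

HAS_DIGIT = re.compile(r"\d")
SENTENCE_SPLIT = re.compile(r"(?<=[.!?])\s+")


def flag_vague_sentences(text):
    """Return sentences that use a vague adjective without any number to back it."""
    flagged = []
    for sentence in SENTENCE_SPLIT.split(text):
        sentence = sentence.strip()
        if not sentence:
            continue
        words = {w.strip(".,!?\"'()").lower() for w in sentence.split()}
        if words & VAGUE_WORDS and not HAS_DIGIT.search(sentence):
            flagged.append(sentence)
    return flagged


copy = (
    "Our software is fast and reliable. "
    "Our software processes 10GB of data in 3.4 seconds, "
    "tested on AWS c6i.4xlarge instances (Jan 2025)."
)
flagged = flag_vague_sentences(copy)
```

Here only the first sentence is flagged; the second carries its own evidence.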

2. The Inverted Pyramid: Writing for AI Readers

Why It Matters: Humans might skim, but AI crawlers have "token limits." Tools like ChatGPT and Perplexity often stop parsing deep content after the first 1,000–2,000 tokens (roughly 750–1,500 words) per fetch. If your answer is at the bottom, they might never see it.

How to Structure Content: Adhere strictly to a Top → Bottom Priority:

- Direct Answer: The "What" and "Why" must be in the first 100–200 words.

- Supporting Explanation: The "How."

- Details & Edge Cases: The deep dive.

Example Structure:

- H1: What Is Generative Engine Optimization?

- [Direct definition + 3 key bullet points immediately]

- H2: Why GEO Matters in 2025

- H2: Step-by-Step Strategies

Golden Rule: If the AI reads only the first screen of your page, it should still have enough information to construct a complete answer.
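A rough way to check front-loading is to estimate how many tokens precede your direct answer. The sketch below uses the ratio implied by the figures above (1,000 tokens is roughly 750 words); real tokenizers vary by model, and the 1,000-token default budget is an assumption taken from the lower bound quoted in this section.

```python
# Ratio implied by "1,000-2,000 tokens (roughly 750-1,500 words)".
WORDS_PER_TOKEN = 0.75


def estimated_token_position(page_text, answer):
    """Estimate how many tokens appear before the answer, or None if it is absent."""
    idx = page_text.lower().find(answer.lower())
    if idx == -1:
        return None
    words_before = len(page_text[:idx].split())
    return round(words_before / WORDS_PER_TOKEN)


def is_front_loaded(page_text, answer, token_budget=1000):
    """True if the answer starts within the assumed token budget."""
    position = estimated_token_position(page_text, answer)
    return position is not None and position <= token_budget


ANSWER = "GEO is the practice of structuring content for AI answers."
good_page = "intro " * 30 + ANSWER
buried_page = "filler " * 1600 + ANSWER

good_position = estimated_token_position(good_page, ANSWER)
buried_position = estimated_token_position(buried_page, ANSWER)
```

In this example the answer on `good_page` starts at about token 40, while on `buried_page` it starts past token 2,000 and would fall outside both ends of the quoted range.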

3. JavaScript, Rendering & Visibility Traps

The Hidden Problem: While Google is good at rendering JavaScript, many other AI crawlers are not. If your content relies on client-side rendering (React, Vue, Angular) to load text, an AI bot might just see a blank page.

Best Practices:

- Server-Side Rendering (SSR): Ensure your core content is delivered as raw HTML.

- Don't Hide the Goods: Avoid burying key text behind accordions, tabs, or "click to load more" buttons. If it's not in the initial HTML snapshot, treat it as invisible to AI.

Quick Test: Right-click your page and select "View Page Source." If your main article text isn't visible in that raw HTML, crawlers that don't execute JavaScript can't read it.
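The same test can be scripted. This sketch strips tags from raw HTML with Python's built-in `html.parser` and checks whether a key phrase is present without running any JavaScript. The `fetch_raw_html` helper and its user-agent string are hypothetical conveniences; the two sample documents stand in for a server-rendered page and a client-rendered shell.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class _TextOnly(HTMLParser):
    """Collects text nodes, ignoring script and style contents."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def visible_in_raw_html(raw_html, phrase):
    """True if phrase appears in the text of the HTML as delivered (no JS executed)."""
    parser = _TextOnly()
    parser.feed(raw_html)
    text = " ".join(" ".join(parser.parts).split()).lower()
    return " ".join(phrase.split()).lower() in text


def fetch_raw_html(url, user_agent="geo-audit-sketch/0.1"):
    """Fetch a page without rendering it (hypothetical helper; UA string is made up)."""
    request = Request(url, headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


SSR_HTML = (
    "<html><body><article><h1>What Is GEO?</h1>"
    "<p>GEO makes content reusable by AI systems.</p></article></body></html>"
)
CSR_HTML = '<html><body><div id="root"></div><script src="/app.js"></script></body></html>'

ssr_ok = visible_in_raw_html(SSR_HTML, "GEO makes content reusable")
csr_ok = visible_in_raw_html(CSR_HTML, "GEO makes content reusable")
```

For a live page, `visible_in_raw_html(fetch_raw_html(url), phrase)` gives the same answer "View Page Source" would.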

4. Schema.org as an AI “Translator”

What It Really Does: Schema markup isn't just for getting stars in Google search results anymore. For LLMs, it acts as a semantic translator. It tells the AI, "This block of text isn't just a paragraph; it is a verified Answer to a specific Question."

High-Impact Schemas for GEO:

- Article: Establishes authorship and dates.

- FAQPage: Directly feeds Q&A formats to bots.

- HowTo: Provides step-by-step logic for instructional queries.

Tip: Match your Schema Q&A exactly with the visible content on your page. Discrepancies reduce trust.
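One way to keep markup and visible copy in sync is to generate the FAQPage markup from the same question-and-answer pairs and verify them against the page text. The sketch below does both; the sample pairs and page text are invented for illustration, and the exact-match check (after whitespace and case normalization) is a deliberately strict assumption.

```python
import json


def build_faq_jsonld(pairs):
    """Build a Schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def _normalize(text):
    return " ".join(text.split()).lower()


def missing_from_page(pairs, visible_text):
    """Return questions whose question or answer text is not on the visible page."""
    page = _normalize(visible_text)
    return [
        question
        for question, answer in pairs
        if _normalize(question) not in page or _normalize(answer) not in page
    ]


# Invented sample content.
PAIRS = [
    ("What is GEO?", "GEO is the practice of structuring content for AI answers."),
    ("Does GEO replace SEO?", "No, it builds on technical SEO fundamentals."),
]
PAGE_TEXT = """
What is GEO?
GEO is the practice of structuring content for AI answers.
Does GEO replace SEO?
Not really. The two work together.
"""

faq_markup = json.dumps(build_faq_jsonld(PAIRS), indent=2)
mismatches = missing_from_page(PAIRS, PAGE_TEXT)
```

Here `mismatches` contains the second question, because its marked-up answer differs from what a visitor would actually read.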

5. Future Outlook: Where GEO Is Headed

A. The Agentic Web

We are moving from an era where AI reads content to one where AI acts on it.

- The Request: "Book this flight" or "Compare these tools and sign me up for the best one."

- The Requirement: Websites must expose clear actions, structured steps, and API-friendly flows to allow agents to complete tasks on behalf of users.

B. The Death of the Click

Users are increasingly satisfying their intent without ever visiting a website.

What This Means for Brands:

- Traffic ≠ Visibility: You can lose traffic but gain brand awareness.

- New Metrics: Success will be measured by "Share of Model" (how often you are cited) rather than just pageviews.

- Authority > Rankings: Being the trusted source that the AI cites is more valuable than being the third blue link.
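There is no standard formula for "Share of Model" yet. One simple working definition, assumed here purely for illustration, is the fraction of sampled AI answers that cite your domain. The sample data below is invented.

```python
def share_of_model(answers, domain):
    """Fraction of sampled answers whose cited sources include the given domain.

    `answers` is a list where each item is the list of domains cited by one answer.
    """
    if not answers:
        return 0.0
    cited = sum(1 for sources in answers if domain in sources)
    return cited / len(answers)


# Invented sample: sources cited across four AI answers to tracked queries.
sampled_answers = [
    ["example.com", "competitor.com"],
    ["competitor.com"],
    [],
    ["example.com"],
]

our_share = share_of_model(sampled_answers, "example.com")
their_share = share_of_model(sampled_answers, "competitor.com")
```

Tracked over time against a fixed set of queries, a number like this gives a trend line even when pageviews fall.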

Final GEO Checklist

Before publishing, ensure your content hits these five marks:

- ✅ Fact-Rich: Is every claim backed by specific data?

- ✅ Front-Loaded: Is the direct answer in the first 200 words?

- ✅ HTML-Ready: Is the content visible in "View Source" (SSR)?

- ✅ Schema-Tagged: Are FAQ and Article schemas implemented?

- ✅ Re-Usable: Is the content written to be cited, not just read?

Don’t let your website stay invisible to AI. Our expert SEO team at Adcliq360 specializes in Generative Engine Optimization (GEO) to make your content AI-readable, trusted, and cited.